Unsettling Findings from Our Experiment Pitting ChatGPT Against AI Text Detection Tools

The ongoing chatbot race in Silicon Valley has produced numerous AI tools that can generate humanlike text, a development that has left many people bewildered.

Now that software can quickly produce good essays on almost any topic, educators face difficult decisions. They are debating whether to bring back pen-and-paper exams, tighten exam supervision, or ban AI-generated content outright.

Many solutions have been suggested, but the most effective way to address AI-generated text in education would be to reliably tell AI-generated text apart from human writing.

The Conversation investigated a number of suggested methods and tools for identifying text written by AI. Each of them has its limitations, can be worked around, and is unlikely to ever be as accurate as we would like.

You might wonder why AI companies, with all their cutting-edge technology, cannot reliably tell AI-generated text from human writing. The answer is surprisingly simple: the goal of the current race in artificial intelligence (AI) is to train natural language processing (NLP) models to produce output that closely resembles human writing. A reliable way to recognise AI-generated text would, in effect, work against that very goal.

A poor attempt

In late January, OpenAI released a "classifier for identifying AI-written text", trained on output from both its own text-generating models and those of other providers. In principle, this means the classifier should be able to flag essays produced not only by ChatGPT but also by BLOOM or similar software.

At best, we would give this classifier a C-. By OpenAI's own figures, it correctly identifies only 26% of AI-generated text as such (true positives), while wrongly labelling 9% of human-written text as AI-generated (false positives).

Put the other way round, the classifier fails to flag the remaining 74% of AI-generated text; this share of missed cases is known as the false-negative rate.
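To make these rates concrete, here is a minimal Python sketch that derives them from a confusion matrix. The counts are hypothetical, chosen only to reproduce OpenAI's published 26% and 9% figures on an imagined test set of 100 AI-written and 100 human-written texts; the class and method names are ours, not OpenAI's.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from running a detector over texts whose true origin is known."""
    true_pos: int   # AI-written, flagged as AI
    false_neg: int  # AI-written, missed (labelled human)
    false_pos: int  # human-written, wrongly flagged as AI
    true_neg: int   # human-written, correctly labelled human

    def true_positive_rate(self) -> float:
        # Share of AI-written texts the detector catches
        return self.true_pos / (self.true_pos + self.false_neg)

    def false_negative_rate(self) -> float:
        # Share of AI-written texts the detector misses; always 1 - TPR
        return self.false_neg / (self.true_pos + self.false_neg)

    def false_positive_rate(self) -> float:
        # Share of human-written texts wrongly flagged as AI
        return self.false_pos / (self.false_pos + self.true_neg)


# Hypothetical counts: 100 AI-written and 100 human-written texts
cm = ConfusionMatrix(true_pos=26, false_neg=74, false_pos=9, true_neg=91)
print(f"TPR={cm.true_positive_rate():.0%} "
      f"FNR={cm.false_negative_rate():.0%} "
      f"FPR={cm.false_positive_rate():.0%}")  # TPR=26% FNR=74% FPR=9%
```

Because the true-positive and false-negative rates are computed over the same pool of AI-written texts, they always add up to 100%.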

A potential candidate

One promising candidate was created by a Princeton University student over the course of his holiday break.

The initial version of GPTZero was released in January by computer science major and journalism minor Edward Tian.

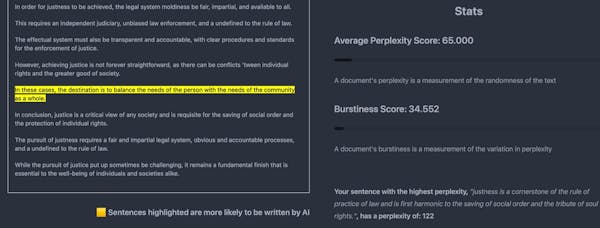

GPTZero uses two metrics to identify AI-generated text: perplexity and burstiness. Perplexity measures how predictable a text is to a language model, while burstiness measures how much that predictability varies from sentence to sentence. A text is more likely to have been produced by AI if it scores low on both.
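GPTZero's exact formulas are not public, so the following Python sketch is only an illustration under stated assumptions. It assumes a language model has already assigned a probability to every token (the probabilities below are made up), computes perplexity as the exponential of the average negative log-probability, and takes burstiness to be the standard deviation of per-sentence perplexities, one common reading of the term. The thresholds in the hypothetical `looks_ai_generated` function are arbitrary.

```python
import math
import statistics


def perplexity(token_probs: list[float]) -> float:
    """exp(mean negative log-probability): low when every token was easy to predict."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def burstiness(sentences: list[list[float]]) -> float:
    """Assumed definition: population standard deviation of per-sentence perplexities."""
    return statistics.pstdev([perplexity(s) for s in sentences])


def looks_ai_generated(sentences: list[list[float]],
                       max_ppl: float = 5.0, max_burst: float = 1.0) -> bool:
    """Hypothetical rule: flag text whose perplexity AND burstiness are both low."""
    all_tokens = [p for s in sentences for p in s]
    return perplexity(all_tokens) < max_ppl and burstiness(sentences) < max_burst


# Made-up per-token probabilities, one inner list per sentence
uniform = [[0.5, 0.5, 0.5], [0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]  # every sentence equally predictable
varied = [[0.5, 0.5], [0.05, 0.1, 0.02], [0.4, 0.3]]            # one surprising sentence

print(looks_ai_generated(uniform))  # True
print(looks_ai_generated(varied))   # False
```

The uniform text has perplexity 2 in every sentence and therefore zero burstiness, which is the flat, predictable profile the paragraph above associates with AI output.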

We pitted this humble David against the Goliath that is ChatGPT.

First, The Conversation gave ChatGPT a writing assignment on justice and received a short essay in return. The essay was then pasted into GPTZero unchanged. Based on its very low average perplexity and burstiness scores, Tian's program concluded that the text was likely written entirely by AI.

Classifiers being misled

Swapping a few words for synonyms can easily fool AI classifiers. Websites offering tools that rewrite AI-generated text for exactly this purpose already exist and are growing in popularity.

Many of these tools leave telltale signs of their own, such as "tortured phrases": uncommon words or unusual word combinations, and ungrammatical or illogical wording. For example, "artificial intelligence" might become "counterfeit consciousness".

We put GPTZero through a tougher test using a version of ChatGPT's justice essay modified by GPT-Minus1, a website that claims to "scramble" ChatGPT text by substituting synonyms. The image on the left shows the original essay, and the image on the right shows the changes GPT-Minus1 made, which affected about 14% of the text.

Source: www.theconversation.com
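GPT-Minus1's internals are not described here, so the following Python sketch only illustrates the general idea of synonym scrambling. The `SYNONYMS` table is a tiny hypothetical stand-in for a real thesaurus, and the `scramble` function (also hypothetical) reports what fraction of words it changed, comparable to the roughly 14% figure above.

```python
# Hypothetical synonym table; a real tool would draw on a much larger thesaurus
SYNONYMS = {
    "justice": "fairness",
    "important": "crucial",
    "society": "community",
    "ensure": "guarantee",
}


def scramble(text: str, synonyms: dict[str, str]) -> tuple[str, float]:
    """Replace words found in `synonyms`; return the new text and the fraction of words changed."""
    words = text.split()
    out = []
    changed = 0
    for word in words:
        core = word.rstrip(".,;:!?")  # keep trailing punctuation out of the lookup
        tail = word[len(core):]
        replacement = synonyms.get(core.lower())
        if replacement is None:
            out.append(word)
            continue
        if core[0].isupper():  # preserve capitalisation of the original word
            replacement = replacement.capitalize()
        out.append(replacement + tail)
        changed += 1
    return " ".join(out), (changed / len(words) if words else 0.0)


new_text, share = scramble("Justice is important for society.", SYNONYMS)
print(new_text)                         # Fairness is crucial for community.
print(f"{share:.0%} of words changed")  # 60% of words changed
```

Even this naive substitution yields slightly off wording ("crucial for community"), the kind of awkwardness that can give paraphrasing tools away.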

When we ran the GPT-Minus1 version of the justice essay through GPTZero, it concluded that the text was most likely written by a human, although a few sentences had low perplexity.

The tool flagged only one sentence as most likely produced by AI (left image below). It also reported overall perplexity and burstiness scores for the essay that were noticeably higher than before (right image below).

Source: www.theconversation.com

Though promising, tools like GPTZero are not flawless and can be worked around. For instance, a recent YouTube tutorial shows how to instruct ChatGPT to produce text with high perplexity and burstiness, making it harder for classifiers like GPTZero to tell human-written and AI-generated text apart.

Watermarking

Another proposal is to embed an invisible, machine-detectable "watermark" in AI-generated text.

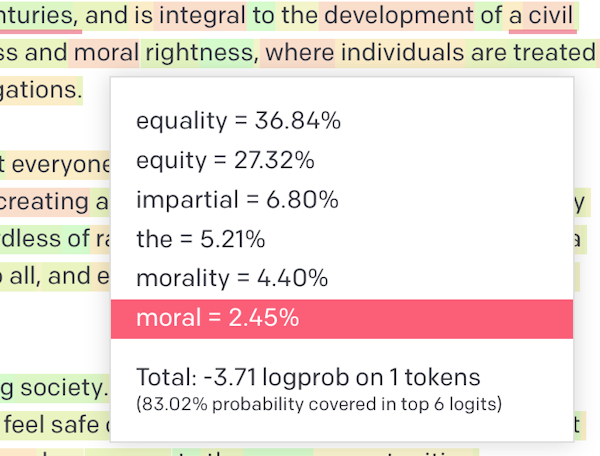

A natural language model generates text one word at a time, choosing each word based on statistical probability.

However, these models don't always pick the single most likely next word. Instead, they choose at random from a list of likely candidates, with higher-probability words having a greater chance of being chosen.

Because of this randomness, the same prompt produces a different output each time a user runs it.

Source: www.theconversation.com
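The word-selection step described above can be sketched in Python with the standard library's `random.choices`. The candidate words and their probabilities are hypothetical, standing in for the distribution a real model would compute for the next word; the sketch contrasts always taking the top word (greedy decoding) with probability-weighted sampling.

```python
import random

# Hypothetical next-word probabilities a model might assign after "Justice is ..."
NEXT_WORD_PROBS = {"fair": 0.5, "blind": 0.3, "essential": 0.15, "elusive": 0.05}


def greedy_pick(probs: dict[str, float]) -> str:
    """Always choose the single most likely word: the same output every time."""
    return max(probs, key=probs.get)


def sample_pick(probs: dict[str, float], rng: random.Random) -> str:
    """Choose at random, weighting each candidate by its probability."""
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


rng = random.Random()  # unseeded: a different sequence on each run
print([greedy_pick(NEXT_WORD_PROBS) for _ in range(5)])       # ['fair', 'fair', 'fair', 'fair', 'fair']
print([sample_pick(NEXT_WORD_PROBS, rng) for _ in range(5)])  # varies from run to run
```

Over many draws, "fair" is picked about half the time and "elusive" only about one time in twenty, mirroring the probability weights.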

Watermarking essentially means restricting the AI to choosing words from a predetermined list of "whitelisted" words while "blacklisting" other likely words. This makes it possible to distinguish human-written text from AI-generated text: a human writer, unaware of the lists, will use blacklisted words at their normal rate, whereas watermarked AI text will contain few or none of them.
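The following Python sketch illustrates the whitelist idea under simplifying assumptions. It is loosely modelled on the "green list" watermark proposed by Kirchenbauer et al. (2023), in which the whitelist is re-derived for every word from a hash of the previous word, so a detector using the same hashing rule can rebuild it. The toy vocabulary, the 50% whitelist share `GAMMA` and all function names are hypothetical, and real schemes typically nudge a model's probabilities toward whitelisted words rather than picking uniformly among them as this toy does. Detection counts how many words fall on their whitelist and compares that with the share expected by chance (`GAMMA`) using a z-score.

```python
import hashlib
import math
import random

VOCAB = [f"word{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.5                                # hypothetical share of vocabulary whitelisted per step


def whitelist(prev_word: str) -> set[str]:
    """Derive the whitelist from a hash of the previous word, so a detector can rebuild it."""
    seed = int.from_bytes(hashlib.sha256(prev_word.encode()).digest()[:8], "big")
    return set(random.Random(seed).sample(VOCAB, int(len(VOCAB) * GAMMA)))


def generate_watermarked(first_word: str, length: int, rng: random.Random) -> list[str]:
    """Toy 'model' that only ever emits whitelisted words (a hard watermark)."""
    words = [first_word]
    for _ in range(length):
        allowed = sorted(whitelist(words[-1]))  # sort so the choice is reproducible for a given rng
        words.append(rng.choice(allowed))
    return words


def watermark_z_score(words: list[str]) -> float:
    """How far the count of whitelisted words exceeds what unwatermarked text gives by chance."""
    n = len(words) - 1
    hits = sum(cur in whitelist(prev) for prev, cur in zip(words, words[1:]))
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


rng = random.Random(0)
ai_text = generate_watermarked("word0", 200, rng)
human_text = [rng.choice(VOCAB) for _ in range(201)]  # stand-in for text written without the lists
print(round(watermark_z_score(ai_text), 1))     # 14.1: every word is whitelisted
print(round(watermark_z_score(human_text), 1))  # close to 0
```

Unwatermarked text lands on the whitelist only about half the time, so its z-score hovers near zero, while the watermarked text sits far above any plausible chance level.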

A persistent arms race

As AI-generated text detectors improve, an arms race is unfolding. Turnitin, the anti-plagiarism service, has announced a new AI writing detector with a claimed accuracy of 97%.

Text generators, however, are advancing just as quickly. Bard, Google's ChatGPT rival, is currently in limited public testing, and GPT-4, a major update from OpenAI, is expected later this year.

OpenAI itself acknowledges that AI text detectors will never be perfect and that there will always be creative ways to fool them.

As this contest between detectors and generators continues, we may see a rise in the practice of "contract paraphrasing": rather than paying someone to write their assignment, people will pay someone to rework their AI-generated text so that it evades detection.

AI-generated text poses a difficult challenge for teachers. Technical solutions may help, but meeting it will also require new methods of teaching and assessment, which may include harnessing AI itself.

The authors of the original article in The Conversation have been working on open-source AI tools for education and research for the past year, aiming to strike a balance between the old and the new. These tools are available in beta at Safe-To-Fail AI.
